|

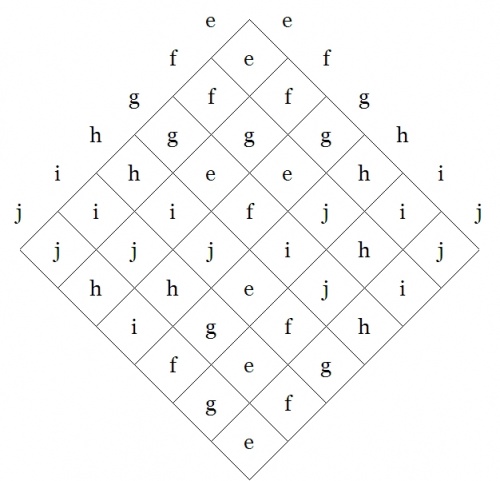

\(\begin{matrix}
\mathrm{G}
& = & \mathrm{e}:\mathrm{e}
& + & \mathrm{f}:\mathrm{f}
& + & \mathrm{g}:\mathrm{g}
& + & \mathrm{h}:\mathrm{h}
\end{matrix}\!\)

|

In an elementary relative \(i\!:\!j,\!\) \(i\!\) is the relate, \(j\!\) is the correlate, and in our current example \(i\!:\!j,\!\) or more exactly, \(m_{ij} = 1,\!\) is taken to say that \(i\!\) is a marker for \(j.\!\) This is the mode of reading that we call “multiplying on the left”.

In the algebraic, permutational, or transformational contexts of application, however, Peirce converts to the alternative mode of reading. Although he still calls \(i\!\) the relate and \(j\!\) the correlate, the elementary relative \(i\!:\!j\!\) now means that \(i\!\) gets changed into \(j.\!\) In this scheme of reading, the transformation \(a\!:\!b + b\!:\!c + c\!:\!a\!\) is a permutation of the aggregate \(\mathbf{1} = a + b + c,\!\) or what we would now call the set \(\{ a, b, c \}.\!\) In particular, it is the permutation that is otherwise notated as follows:

|

\(\begin{Bmatrix}

a & b & c

\\

b & c & a

\end{Bmatrix}\!\)

|

This is consistent with the convention that Peirce uses in the paper “On a Class of Multiple Algebras” (CP 3.324–327).

We've been exploring the applications of a certain technique for clarifying abstruse concepts, a rough-cut version of the pragmatic maxim that I've been accustomed to refer to as the operationalization of ideas. The basic idea is to replace the question of What it is, which modest people comprehend is far beyond their powers to answer definitively any time soon, with the question of What it does, which most people know at least a modicum about.

In the case of regular representations of groups we found a non-plussing surplus of answers to sort our way through. So let us track back one more time to see if we can learn any lessons that might carry over to more realistic cases.

Here is the operation table of \(V_4\!\) once again:

\(\text{Klein Four-Group}~ V_4\!\)

| \(\cdot\!\) | \(\mathrm{e}\!\) | \(\mathrm{f}\!\) | \(\mathrm{g}\!\) | \(\mathrm{h}\!\) |
| --- | --- | --- | --- | --- |
| \(\mathrm{e}\!\) | \(\mathrm{e}\!\) | \(\mathrm{f}\!\) | \(\mathrm{g}\!\) | \(\mathrm{h}\!\) |
| \(\mathrm{f}\!\) | \(\mathrm{f}\!\) | \(\mathrm{e}\!\) | \(\mathrm{h}\!\) | \(\mathrm{g}\!\) |
| \(\mathrm{g}\!\) | \(\mathrm{g}\!\) | \(\mathrm{h}\!\) | \(\mathrm{e}\!\) | \(\mathrm{f}\!\) |
| \(\mathrm{h}\!\) | \(\mathrm{h}\!\) | \(\mathrm{g}\!\) | \(\mathrm{f}\!\) | \(\mathrm{e}\!\) |

A group operation table is really just a device for recording a certain 3-adic relation, to be specific, the set of triples of the form \((x, y, z)\!\) satisfying the equation \(x \cdot y = z.\!\)

In the case of \(V_4 = (G, \cdot),\!\) where \(G\!\) is the underlying set \(\{ \mathrm{e}, \mathrm{f}, \mathrm{g}, \mathrm{h} \},\!\) we have the 3-adic relation \(L(V_4) \subseteq G \times G \times G\!\) whose triples are listed below:

|

\(\begin{matrix}

(\mathrm{e}, \mathrm{e}, \mathrm{e}) &

(\mathrm{e}, \mathrm{f}, \mathrm{f}) &

(\mathrm{e}, \mathrm{g}, \mathrm{g}) &

(\mathrm{e}, \mathrm{h}, \mathrm{h})

\\[6pt]

(\mathrm{f}, \mathrm{e}, \mathrm{f}) &

(\mathrm{f}, \mathrm{f}, \mathrm{e}) &

(\mathrm{f}, \mathrm{g}, \mathrm{h}) &

(\mathrm{f}, \mathrm{h}, \mathrm{g})

\\[6pt]

(\mathrm{g}, \mathrm{e}, \mathrm{g}) &

(\mathrm{g}, \mathrm{f}, \mathrm{h}) &

(\mathrm{g}, \mathrm{g}, \mathrm{e}) &

(\mathrm{g}, \mathrm{h}, \mathrm{f})

\\[6pt]

(\mathrm{h}, \mathrm{e}, \mathrm{h}) &

(\mathrm{h}, \mathrm{f}, \mathrm{g}) &

(\mathrm{h}, \mathrm{g}, \mathrm{f}) &

(\mathrm{h}, \mathrm{h}, \mathrm{e})

\end{matrix}\!\)

|
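As a small illustration, the triples above can be generated mechanically from the operation table. The following Python sketch is only one possible encoding: the names `ELEMENTS`, `TABLE`, and `product` are hypothetical, chosen for this example, and the table rows are copied from the Klein four-group table given earlier. The last check confirms that every pair \((x, y)\!\) occurs exactly once, which is what it takes for the relation to be a function of two arguments.

```python
# Hypothetical encoding of the V_4 operation table: row x, read across the columns e, f, g, h.
ELEMENTS = "efgh"
TABLE = {
    "e": "efgh",
    "f": "fehg",
    "g": "ghef",
    "h": "hgfe",
}

def product(x, y):
    """Look up x . y as the entry in row x, column y of the table."""
    return TABLE[x][ELEMENTS.index(y)]

# The 3-adic relation L(V_4): all triples (x, y, z) with x . y = z.
triples = [(x, y, product(x, y)) for x in ELEMENTS for y in ELEMENTS]

# The relation is a function G x G -> G when each pair (x, y) occurs exactly once.
pairs = [(x, y) for (x, y, _) in triples]
is_function = len(set(pairs)) == len(pairs) == len(ELEMENTS) ** 2

for start in range(0, len(triples), 4):
    print("  ".join("(%s, %s, %s)" % t for t in triples[start:start + 4]))
print("functional:", is_function)
```

The four printed rows follow the same order as the array of triples displayed above.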

It is part of the definition of a group that the 3-adic relation \(L \subseteq G^3\!\) is actually a function \(L : G \times G \to G.\!\) It is from this functional perspective that we can see an easy way to derive the two regular representations. Since we have a function of the type \(L : G \times G \to G,\!\) we can define a couple of substitution operators:

| 1.

|

\(\mathrm{Sub}(x, (\underline{~~}, y))\!\) puts any specified \(x\!\) into the empty slot of the rheme \((\underline{~~}, y),\!\) with the effect of producing the saturated rheme \((x, y)\!\) that evaluates to \(xy.~\!\)

|

| 2.

|

\(\mathrm{Sub}(x, (y, \underline{~~}))\!\) puts any specified \(x\!\) into the empty slot of the rheme \((y, \underline{~~}),\!\) with the effect of producing the saturated rheme \((y, x)\!\) that evaluates to \(yx.~\!\)

|

In (1) we consider the effects of each \(x\!\) in its practical bearing on contexts of the form \((\underline{~~}, y),\!\) as \(y\!\) ranges over \(G,\!\) and the effects are such that \(x\!\) takes \((\underline{~~}, y)\!\) into \(xy,\!\) for \(y\!\) in \(G,\!\) all of which is notated as \(x = \{ (y : xy) ~|~ y \in G \}.\!\) The pairs \((y : xy)\!\) can be found by picking an \(x\!\) from the left margin of the group operation table and considering its effects on each \(y\!\) in turn as these run across the top margin. This aspect of pragmatic definition we recognize as the regular ante-representation:

|

\(\begin{matrix}

\mathrm{e}

& = & \mathrm{e}\!:\!\mathrm{e}

& + & \mathrm{f}\!:\!\mathrm{f}

& + & \mathrm{g}\!:\!\mathrm{g}

& + & \mathrm{h}\!:\!\mathrm{h}

\\[4pt]

\mathrm{f}

& = & \mathrm{e}\!:\!\mathrm{f}

& + & \mathrm{f}\!:\!\mathrm{e}

& + & \mathrm{g}\!:\!\mathrm{h}

& + & \mathrm{h}\!:\!\mathrm{g}

\\[4pt]

\mathrm{g}

& = & \mathrm{e}\!:\!\mathrm{g}

& + & \mathrm{f}\!:\!\mathrm{h}

& + & \mathrm{g}\!:\!\mathrm{e}

& + & \mathrm{h}\!:\!\mathrm{f}

\\[4pt]

\mathrm{h}

& = & \mathrm{e}\!:\!\mathrm{h}

& + & \mathrm{f}\!:\!\mathrm{g}

& + & \mathrm{g}\!:\!\mathrm{f}

& + & \mathrm{h}\!:\!\mathrm{e}

\end{matrix}\!\)

|

In (2) we consider the effects of each \(x\!\) in its practical bearing on contexts of the form \((y, \underline{~~}),\!\) as \(y\!\) ranges over \(G,\!\) and the effects are such that \(x\!\) takes \((y, \underline{~~})\!\) into \(yx,\!\) for \(y\!\) in \(G,\!\) all of which is notated as \(x = \{ (y : yx) ~|~ y \in G \}.\!\) The pairs \((y : yx)\!\) can be found by picking an \(x\!\) from the top margin of the group operation table and considering its effects on each \(y\!\) in turn as these run down the left margin. This aspect of pragmatic definition we recognize as the regular post-representation:

|

\(\begin{matrix}

\mathrm{e}

& = & \mathrm{e}\!:\!\mathrm{e}

& + & \mathrm{f}\!:\!\mathrm{f}

& + & \mathrm{g}\!:\!\mathrm{g}

& + & \mathrm{h}\!:\!\mathrm{h}

\\[4pt]

\mathrm{f}

& = & \mathrm{e}\!:\!\mathrm{f}

& + & \mathrm{f}\!:\!\mathrm{e}

& + & \mathrm{g}\!:\!\mathrm{h}

& + & \mathrm{h}\!:\!\mathrm{g}

\\[4pt]

\mathrm{g}

& = & \mathrm{e}\!:\!\mathrm{g}

& + & \mathrm{f}\!:\!\mathrm{h}

& + & \mathrm{g}\!:\!\mathrm{e}

& + & \mathrm{h}\!:\!\mathrm{f}

\\[4pt]

\mathrm{h}

& = & \mathrm{e}\!:\!\mathrm{h}

& + & \mathrm{f}\!:\!\mathrm{g}

& + & \mathrm{g}\!:\!\mathrm{f}

& + & \mathrm{h}\!:\!\mathrm{e}

\end{matrix}\!\)

|

If the ante-rep looks the same as the post-rep, now that I'm writing them in the same dialect, that is because \(V_4\!\) is abelian (commutative), and so the two representations have the very same effects on each point of their bearing.
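For readers who like to check such things by machine, here is a minimal Python sketch of the two substitution recipes applied to \(V_4.\!\) The function names `ante_rep`, `post_rep`, and `show` are assumptions made for this illustration, not notation from Peirce or from the text above. Each representation is computed as a set of pairs \((y : xy)\!\) or \((y : yx),\!\) and the two are compared element by element.

```python
# Hypothetical encoding of the V_4 operation table (row x, columns e, f, g, h).
ELEMENTS = "efgh"
TABLE = {"e": "efgh", "f": "fehg", "g": "ghef", "h": "hgfe"}

def product(x, y):
    """Look up x . y in the operation table."""
    return TABLE[x][ELEMENTS.index(y)]

def ante_rep(x):
    """x = {(y : xy) | y in G}: x fills the first slot of the rheme (_, y)."""
    return {(y, product(x, y)) for y in ELEMENTS}

def post_rep(x):
    """x = {(y : yx) | y in G}: x fills the second slot of the rheme (y, _)."""
    return {(y, product(y, x)) for y in ELEMENTS}

def show(pairs):
    """Write a set of pairs as a logical sum of elementary relatives."""
    return " + ".join("%s:%s" % p for p in sorted(pairs))

for x in ELEMENTS:
    print(x, "=", show(ante_rep(x)), "| equal to post-rep:", ante_rep(x) == post_rep(x))
```

Because \(V_4\!\) is abelian, the comparison comes out true for every element.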

So long as we're in the neighborhood, we might as well take in some more of the sights, for instance, the smallest example of a non-abelian (non-commutative) group. This is a group of six elements, say, \(G = \{ \mathrm{e}, \mathrm{f}, \mathrm{g}, \mathrm{h}, \mathrm{i}, \mathrm{j} \},\!\) with no relation to any other employment of these six symbols being implied, of course, and it can be most easily represented as the permutation group on a set of three letters, say, \(X = \{ a, b, c \},\!\) usually notated as \(G = \mathrm{Sym}(X)\!\) or more abstractly and briefly, as \(\mathrm{Sym}(3)\!\) or \(S_3.\!\) The next Table shows the intended correspondence between abstract group elements and the permutation or substitution operations in \(\mathrm{Sym}(X).\!\)

\(\text{Permutation Substitutions in}~ \mathrm{Sym} \{ a, b, c \}\!\)

| \(\mathrm{e}\!\) | \(\mathrm{f}\!\) | \(\mathrm{g}\!\) | \(\mathrm{h}\!\) | \(\mathrm{i}~\!\) | \(\mathrm{j}\!\) |
| --- | --- | --- | --- | --- | --- |
| \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] a & b & c \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] c & a & b \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] b & c & a \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] a & c & b \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] c & b & a \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] b & a & c \end{matrix}\!\) |

Here is the operation table for \(S_3,\!\) given in abstract fashion:

| \(\text{Symmetric Group}~ S_3\!\)

|

|

By the way, we will meet with the symmetric group \(S_3~\!\) again when we return to take up the study of Peirce's early paper “On a Class of Multiple Algebras” (CP 3.324–327), and also his late unpublished work “The Simplest Mathematics” (1902) (CP 4.227–323), with particular reference to the section that treats of “Trichotomic Mathematics” (CP 4.307–323).

By way of collecting a short-term pay-off for all the work that we did on the regular representations of the Klein 4-group \(V_4,\!\) let us write out as quickly as possible in relative form a minimal budget of representations for the symmetric group on three letters, \(\mathrm{Sym}(3).\!\) After doing the usual bit of compare and contrast among the various representations, we will have enough concrete material beneath our abstract belts to tackle a few of the presently obscured details of Peirce's early “Algebra + Logic” papers.

Writing the permutations or substitutions of \(\mathrm{Sym} \{ a, b, c \}\!\) in relative form generates what is generally thought of as a natural representation of \(S_3.~\!\)

|

\(\begin{matrix}

\mathrm{e}

& = & a\!:\!a

& + & b\!:\!b

& + & c\!:\!c

\\[4pt]

\mathrm{f}

& = & a\!:\!c

& + & b\!:\!a

& + & c\!:\!b

\\[4pt]

\mathrm{g}

& = & a\!:\!b

& + & b\!:\!c

& + & c\!:\!a

\\[4pt]

\mathrm{h}

& = & a\!:\!a

& + & b\!:\!c

& + & c\!:\!b

\\[4pt]

\mathrm{i}

& = & a\!:\!c

& + & b\!:\!b

& + & c\!:\!a

\\[4pt]

\mathrm{j}

& = & a\!:\!b

& + & b\!:\!a

& + & c\!:\!c

\end{matrix}\!\)

|

I have, without stopping to think about it, written out this natural representation of \(S_3~\!\) in the style that comes most naturally to me, to wit, the “right” way, whereby an ordered pair configured as \(x\!:\!y\!\) constitutes the turning of \(x\!\) into \(y.\!\) It is possible that the next time we check in with CSP we will have to adjust our sense of direction, but that will be an easy enough bridge to cross when we come to it.
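One way to get a feel for this reading is to compose the six substitutions by machine and tabulate the results. The Python sketch below does that. The dictionary `PERMS` and the helpers `compose`, `name_of`, and `product` are hypothetical names introduced for this example. It also assumes that a product \(xy\!\) means "apply \(x\!\) first, then \(y\!\)", a convention chosen here because it agrees with the regular representations of \(S_3\!\) listed in this section. The opposite convention would transpose the table.

```python
# Natural representation of S_3, read as "x:y means x turns into y".
PERMS = {
    "e": {"a": "a", "b": "b", "c": "c"},
    "f": {"a": "c", "b": "a", "c": "b"},
    "g": {"a": "b", "b": "c", "c": "a"},
    "h": {"a": "a", "b": "c", "c": "b"},
    "i": {"a": "c", "b": "b", "c": "a"},
    "j": {"a": "b", "b": "a", "c": "c"},
}
NAMES = "efghij"

def compose(x, y):
    """Relative product: apply x first, then y (an assumed convention)."""
    return {s: PERMS[y][PERMS[x][s]] for s in "abc"}

def name_of(perm):
    """Find the group element whose substitution equals perm."""
    return next(n for n, p in PERMS.items() if p == perm)

def product(x, y):
    """Name of the group element x . y."""
    return name_of(compose(x, y))

# Print the operation table: row x, column y holds x . y.
print("  | " + " ".join(NAMES))
for x in NAMES:
    print(x + " | " + " ".join(product(x, y) for y in NAMES))
```

Comparing the entries for \(\mathrm{f} \cdot \mathrm{h}\!\) and \(\mathrm{h} \cdot \mathrm{f}\!\) in the output shows the failure of commutativity.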

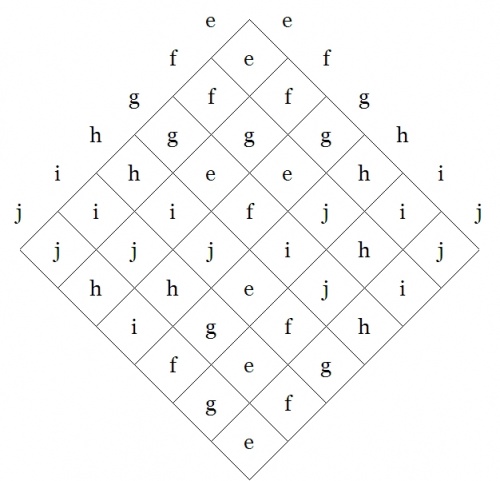

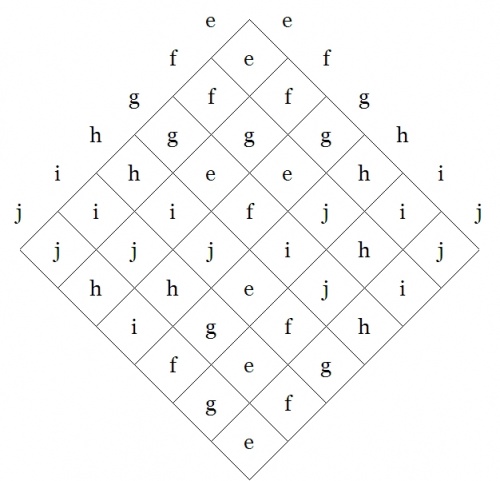

To construct the regular representations of \(S_3,~\!\) we begin with the data of its operation table:

| \(\text{Symmetric Group}~ S_3\!\)

|

|

Just by way of staying clear about what we are doing, let's return to the recipe that we worked out before:

It is part of the definition of a group that the 3-adic relation \(L \subseteq G^3\!\) is actually a function \(L : G \times G \to G.\!\) It is from this functional perspective that we can see an easy way to derive the two regular representations.

Since we have a function of the type \(L : G \times G \to G,\!\) we can define a couple of substitution operators:

| 1.

|

\(\mathrm{Sub}(x, (\underline{~~}, y))\!\) puts any specified \(x\!\) into the empty slot of the rheme \((\underline{~~}, y),\!\) with the effect of producing the saturated rheme \((x, y)\!\) that evaluates to \(xy.~\!\)

|

| 2.

|

\(\mathrm{Sub}(x, (y, \underline{~~}))\!\) puts any specified \(x\!\) into the empty slot of the rheme \((y, \underline{~~}),\!\) with the effect of producing the saturated rheme \((y, x)\!\) that evaluates to \(yx.~\!\)

|

In (1) we consider the effects of each \(x\!\) in its practical bearing on contexts of the form \((\underline{~~}, y),\!\) as \(y\!\) ranges over \(G,\!\) and the effects are such that \(x\!\) takes \((\underline{~~}, y)\!\) into \(xy,\!\) for \(y\!\) in \(G,\!\) all of which is notated as \(x = \{ (y : xy) ~|~ y \in G \}.\!\) The pairs \((y : xy)\!\) can be found by picking an \(x\!\) from the left margin of the group operation table and considering its effects on each \(y\!\) in turn as these run along the right margin. This produces the regular ante-representation of \(S_3,\!\) like so:

|

\(\begin{array}{*{13}{c}}

\mathrm{e}

& = & \mathrm{e}\!:\!\mathrm{e}

& + & \mathrm{f}\!:\!\mathrm{f}

& + & \mathrm{g}\!:\!\mathrm{g}

& + & \mathrm{h}\!:\!\mathrm{h}

& + & \mathrm{i}\!:\!\mathrm{i}

& + & \mathrm{j}\!:\!\mathrm{j}

\\[4pt]

\mathrm{f}

& = & \mathrm{e}\!:\!\mathrm{f}

& + & \mathrm{f}\!:\!\mathrm{g}

& + & \mathrm{g}\!:\!\mathrm{e}

& + & \mathrm{h}\!:\!\mathrm{j}

& + & \mathrm{i}\!:\!\mathrm{h}

& + & \mathrm{j}\!:\!\mathrm{i}

\\[4pt]

\mathrm{g}

& = & \mathrm{e}\!:\!\mathrm{g}

& + & \mathrm{f}\!:\!\mathrm{e}

& + & \mathrm{g}\!:\!\mathrm{f}

& + & \mathrm{h}\!:\!\mathrm{i}

& + & \mathrm{i}\!:\!\mathrm{j}

& + & \mathrm{j}\!:\!\mathrm{h}

\\[4pt]

\mathrm{h}

& = & \mathrm{e}\!:\!\mathrm{h}

& + & \mathrm{f}\!:\!\mathrm{i}

& + & \mathrm{g}\!:\!\mathrm{j}

& + & \mathrm{h}\!:\!\mathrm{e}

& + & \mathrm{i}\!:\!\mathrm{f}

& + & \mathrm{j}\!:\!\mathrm{g}

\\[4pt]

\mathrm{i}

& = & \mathrm{e}\!:\!\mathrm{i}

& + & \mathrm{f}\!:\!\mathrm{j}

& + & \mathrm{g}\!:\!\mathrm{h}

& + & \mathrm{h}\!:\!\mathrm{g}

& + & \mathrm{i}\!:\!\mathrm{e}

& + & \mathrm{j}\!:\!\mathrm{f}

\\[4pt]

\mathrm{j}

& = & \mathrm{e}\!:\!\mathrm{j}

& + & \mathrm{f}\!:\!\mathrm{h}

& + & \mathrm{g}\!:\!\mathrm{i}

& + & \mathrm{h}\!:\!\mathrm{f}

& + & \mathrm{i}\!:\!\mathrm{g}

& + & \mathrm{j}\!:\!\mathrm{e}

\end{array}\!\)

|

In (2) we consider the effects of each \(x\!\) in its practical bearing on contexts of the form \((y, \underline{~~}),\!\) as \(y\!\) ranges over \(G,\!\) and the effects are such that \(x\!\) takes \((y, \underline{~~})\!\) into \(yx,\!\) for \(y\!\) in \(G,\!\) all of which is notated as \(x = \{ (y : yx) ~|~ y \in G \}.\!\) The pairs \((y : yx)\!\) can be found by picking an \(x\!\) on the right margin of the group operation table and considering its effects on each \(y\!\) in turn as these run along the left margin. This produces the regular post-representation of \(S_3,\!\) like so:

|

\(\begin{array}{*{13}{c}}

\mathrm{e}

& = & \mathrm{e}\!:\!\mathrm{e}

& + & \mathrm{f}\!:\!\mathrm{f}

& + & \mathrm{g}\!:\!\mathrm{g}

& + & \mathrm{h}\!:\!\mathrm{h}

& + & \mathrm{i}\!:\!\mathrm{i}

& + & \mathrm{j}\!:\!\mathrm{j}

\\[4pt]

\mathrm{f}

& = & \mathrm{e}\!:\!\mathrm{f}

& + & \mathrm{f}\!:\!\mathrm{g}

& + & \mathrm{g}\!:\!\mathrm{e}

& + & \mathrm{h}\!:\!\mathrm{i}

& + & \mathrm{i}\!:\!\mathrm{j}

& + & \mathrm{j}\!:\!\mathrm{h}

\\[4pt]

\mathrm{g}

& = & \mathrm{e}\!:\!\mathrm{g}

& + & \mathrm{f}\!:\!\mathrm{e}

& + & \mathrm{g}\!:\!\mathrm{f}

& + & \mathrm{h}\!:\!\mathrm{j}

& + & \mathrm{i}\!:\!\mathrm{h}

& + & \mathrm{j}\!:\!\mathrm{i}

\\[4pt]

\mathrm{h}

& = & \mathrm{e}\!:\!\mathrm{h}

& + & \mathrm{f}\!:\!\mathrm{j}

& + & \mathrm{g}\!:\!\mathrm{i}

& + & \mathrm{h}\!:\!\mathrm{e}

& + & \mathrm{i}\!:\!\mathrm{g}

& + & \mathrm{j}\!:\!\mathrm{f}

\\[4pt]

\mathrm{i}

& = & \mathrm{e}\!:\!\mathrm{i}

& + & \mathrm{f}\!:\!\mathrm{h}

& + & \mathrm{g}\!:\!\mathrm{j}

& + & \mathrm{h}\!:\!\mathrm{f}

& + & \mathrm{i}\!:\!\mathrm{e}

& + & \mathrm{j}\!:\!\mathrm{g}

\\[4pt]

\mathrm{j}

& = & \mathrm{e}\!:\!\mathrm{j}

& + & \mathrm{f}\!:\!\mathrm{i}

& + & \mathrm{g}\!:\!\mathrm{h}

& + & \mathrm{h}\!:\!\mathrm{g}

& + & \mathrm{i}\!:\!\mathrm{f}

& + & \mathrm{j}\!:\!\mathrm{e}

\end{array}\!\)

|

If the ante-rep looks different from the post-rep, it is just as it should be, as \(S_3~\!\) is non-abelian (non-commutative), and so the two representations differ in the details of their practical effects, though, of course, being representations of the same abstract group, they must be isomorphic.
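The contrast can be checked with a few lines of Python. In the sketch below, the rows of `TABLE` are read off from the ante-representation listed above (row \(x,\!\) column \(y\!\) holds \(xy\!\)), so the table is a reconstruction made for this example rather than a copy of a table in the text. The names `ante_rep`, `post_rep`, `show`, and `differing` are likewise hypothetical.

```python
# Operation table of S_3, reconstructed from the ante-representation:
# row x, read across the columns e, f, g, h, i, j, gives the products x . y.
NAMES = "efghij"
TABLE = {
    "e": "efghij",
    "f": "fgejhi",
    "g": "gefijh",
    "h": "hijefg",
    "i": "ijhgef",
    "j": "jhifge",
}

def product(x, y):
    """Look up x . y in the operation table."""
    return TABLE[x][NAMES.index(y)]

def ante_rep(x):
    """x = {(y : xy) | y in G}."""
    return {(y, product(x, y)) for y in NAMES}

def post_rep(x):
    """x = {(y : yx) | y in G}."""
    return {(y, product(y, x)) for y in NAMES}

def show(pairs):
    """Write a set of pairs as a logical sum of elementary relatives."""
    return " + ".join("%s:%s" % p for p in sorted(pairs))

# Elements whose two regular representations differ.
differing = [x for x in NAMES if ante_rep(x) != post_rep(x)]

for x in NAMES:
    print(x, "ante:", show(ante_rep(x)))
    print(x, "post:", show(post_rep(x)))
print("differ on:", differing)
```

Only the identity element is represented the same way on both sides.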

|

|

the way of heaven and earth

is to be long continued

in their operation

without stopping

|

|

|

— i ching, hexagram 32

|

The Reader may be wondering what happened to the announced subject of Dynamics And Logic. What happened was a bit like this:

We made the observation that the shift operators \(\{ \mathrm{E}_{ij} \}\!\) form a transformation group that acts on the set of propositions of the form \(f : \mathbb{B} \times \mathbb{B} \to \mathbb{B}.\!\) Group theory is a very attractive subject, but it did not draw us so far from our intended course as one might initially think. For one thing, groups, especially the groups that are named after the Norwegian mathematician Marius Sophus Lie (1842–1899), have turned out to be of critical utility in the solution of differential equations. For another thing, group operations provide us with an ample supply of triadic relations that have been extremely well-studied over the years, and thus they give us no small measure of useful guidance in the study of sign relations, another brand of 3-adic relations that have significance for logical studies, and in our acquaintance with which we have barely begun to break the ice. Finally, I couldn't resist taking up the links between group representations, amounting to the very archetypes of logical models, and the pragmatic maxim.

We've seen a couple of groups, \(V_4\!\) and \(S_3,\!\) represented in various ways, and we've seen their representations presented in a variety of different manners. Let us look at one other stylistic variant for presenting a representation that is frequently seen, the so-called matrix representation of a group.

Recalling the manner of our acquaintance with the symmetric group \(S_3,\!\) we began with the bigraph (bipartite graph) picture of its natural representation as the set of all permutations or substitutions on the set \(X = \{ a, b, c \}.\!\)

\(\text{Permutation Substitutions in}~ \mathrm{Sym} \{ a, b, c \}\!\)

| \(\mathrm{e}\!\) | \(\mathrm{f}\!\) | \(\mathrm{g}\!\) | \(\mathrm{h}\!\) | \(\mathrm{i}~\!\) | \(\mathrm{j}\!\) |
| --- | --- | --- | --- | --- | --- |
| \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] a & b & c \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] c & a & b \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] b & c & a \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] a & c & b \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] c & b & a \end{matrix}\!\) | \(\begin{matrix} a & b & c \\[3pt] \downarrow & \downarrow & \downarrow \\[6pt] b & a & c \end{matrix}\!\) |

These permutations were then converted to relative form as logical sums of elementary relatives:

|

\(\begin{matrix}

\mathrm{e}

& = & a\!:\!a

& + & b\!:\!b

& + & c\!:\!c

\\[4pt]

\mathrm{f}

& = & a\!:\!c

& + & b\!:\!a

& + & c\!:\!b

\\[4pt]

\mathrm{g}

& = & a\!:\!b

& + & b\!:\!c

& + & c\!:\!a

\\[4pt]

\mathrm{h}

& = & a\!:\!a

& + & b\!:\!c

& + & c\!:\!b

\\[4pt]

\mathrm{i}

& = & a\!:\!c

& + & b\!:\!b

& + & c\!:\!a

\\[4pt]

\mathrm{j}

& = & a\!:\!b

& + & b\!:\!a

& + & c\!:\!c

\end{matrix}\!\)

|

From the relational representation of \(\mathrm{Sym} \{ a, b, c \} \cong S_3,\!\) one easily derives a linear representation of the group by viewing each permutation as a linear transformation that maps the elements of a suitable vector space onto each other. Each of these linear transformations is in turn represented by a 2-dimensional array of coefficients in \(\mathbb{B},\!\) resulting in the following set of matrices for the group:

\(\text{Matrix Representations of Permutations in}~ \mathrm{Sym}(3)\!\)

| \(\mathrm{e}\!\) | \(\mathrm{f}\!\) | \(\mathrm{g}\!\) | \(\mathrm{h}\!\) | \(\mathrm{i}~\!\) | \(\mathrm{j}\!\) |
| --- | --- | --- | --- | --- | --- |
| \(\begin{matrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{matrix}~\!\) | \(\begin{matrix} 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \end{matrix}~\!\) | \(\begin{matrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ 1 & 0 & 0 \end{matrix}\!\) | \(\begin{matrix} 1 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & 1 & 0 \end{matrix}~\!\) | \(\begin{matrix} 0 & 0 & 1 \\ 0 & 1 & 0 \\ 1 & 0 & 0 \end{matrix}~\!\) | \(\begin{matrix} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{matrix}~\!\) |

The key to the mysteries of these matrices is revealed by observing that their coefficient entries are arrayed and overlaid on a place-mat marked like so:

|

\(\begin{bmatrix}

a\!:\!a &

a\!:\!b &

a\!:\!c

\\

b\!:\!a &

b\!:\!b &

b\!:\!c

\\

c\!:\!a &

c\!:\!b &

c\!:\!c

\end{bmatrix}\!\)

|
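The place-mat rule is easy to state as a procedure: for each elementary relative \(x\!:\!y\!\) in the sum, put a 1 in row \(x,\!\) column \(y,\!\) and leave 0 everywhere else. The Python sketch below follows that rule. The names `RELATIVES`, `matrix`, and `bool_product` are hypothetical, and the closing check that matrix multiplication over \(\mathbb{B}\!\) mirrors "apply the left factor first" is an added illustration under that assumed ordering.

```python
POINTS = "abc"

# The natural representation of S_3 as sums of elementary relatives.
RELATIVES = {
    "e": ["a:a", "b:b", "c:c"],
    "f": ["a:c", "b:a", "c:b"],
    "g": ["a:b", "b:c", "c:a"],
    "h": ["a:a", "b:c", "c:b"],
    "i": ["a:c", "b:b", "c:a"],
    "j": ["a:b", "b:a", "c:c"],
}

def matrix(name):
    """Place a 1 at row x, column y for each elementary relative x:y."""
    m = [[0] * len(POINTS) for _ in POINTS]
    for rel in RELATIVES[name]:
        x, y = rel.split(":")
        m[POINTS.index(x)][POINTS.index(y)] = 1
    return m

def bool_product(m, n):
    """Matrix product over B: OR of ANDs along each row and column."""
    size = len(POINTS)
    return [[int(any(m[r][k] and n[k][c] for k in range(size)))
             for c in range(size)] for r in range(size)]

for name in RELATIVES:
    print(name, matrix(name))

# With rows as sources and columns as targets, M_f M_h is the matrix of "f, then h".
print(bool_product(matrix("f"), matrix("h")) == matrix("j"))
```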

Quick Review : Field Picture

Let us summarize, in rough but intuitive terms, the outlook on differential logic that we have reached so far. We've been considering a class of operators on universes of discourse, each of which takes us from considering one universe of discourse, \(X^\circ,\!\) to considering a larger universe of discourse, \(\mathrm{E}X^\circ.\!\) An operator \(\mathrm{W}\!\) of this general type, namely, \(\mathrm{W} : X^\circ \to \mathrm{E}X^\circ,\!\) acts on each proposition \(f : X \to \mathbb{B}\!\) of the source universe \({X^\circ}\!\) to produce a proposition \(\mathrm{W}f : \mathrm{E}X \to \mathbb{B}\!\) of the target universe \(\mathrm{E}X^\circ.\!\)

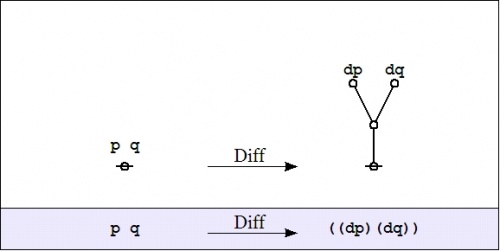

The two main operators that we've examined so far are the enlargement or shift operator \(\mathrm{E} : X^\circ \to \mathrm{E}X^\circ\!\) and the difference operator \(\mathrm{D} : X^\circ \to \mathrm{E}X^\circ.\!\) The operators \(\mathrm{E}\!\) and \(\mathrm{D}\!\) act on propositions in \(X^\circ,\!\) that is, propositions of the form \(f : X \to \mathbb{B}\!\) that are said to be about the subject matter of \(X,\!\) and they produce extended propositions of the forms \(\mathrm{E}f, \mathrm{D}f : \mathrm{E}X \to \mathbb{B},\!\) propositions whose extended sets of variables allow them to be read as being about specified collections of changes that conceivably occur in \(X.\!\)

At this point we find ourselves in need of visual representations, suitable arrays of concrete pictures to anchor our more earthy intuitions and to help us keep our wits about us as we venture higher into the ever more rarefied air of abstractions.

One good picture comes to us by way of the field concept. Given a space \(X,\!\) a field of a specified type \(Y\!\) over \(X\!\) is formed by associating with each point of \(X\!\) an object of type \(Y.\!\) If that sounds like the same thing as a function from \(X\!\) to the space of things of type \(Y\!\) — it is nothing but — and yet it does seem helpful to vary the mental images and to take advantage of the figures of speech that spring to mind under the emblem of this field idea.

In the field picture a proposition \(f : X \to \mathbb{B}\!\) becomes a scalar field, that is, a field of values in \(\mathbb{B}.\!\)

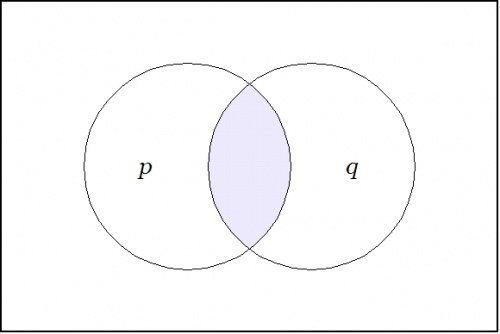

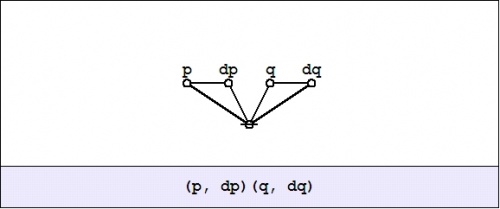

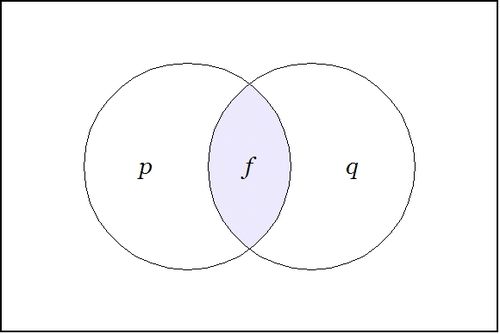

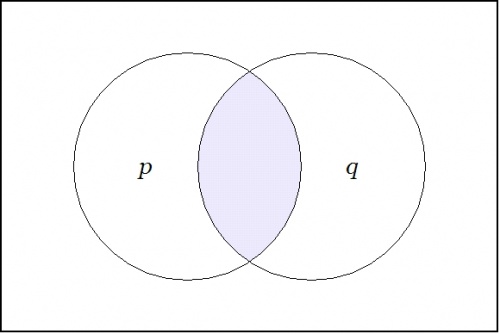

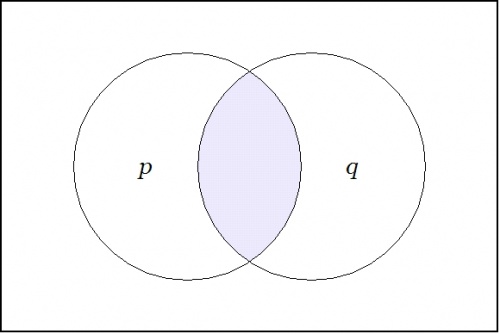

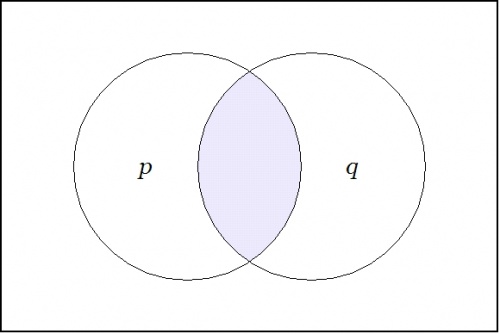

For example, consider the logical conjunction \(pq : X \to \mathbb{B}\!\) that is shown in the following venn diagram:

|

| \(\text{Conjunction}~ pq : X \to \mathbb{B}\!\)

|

Each of the operators \(\mathrm{E}, \mathrm{D} : X^\circ \to \mathrm{E}X^\circ\!\) takes us from considering propositions \(f : X \to \mathbb{B},\!\) here viewed as scalar fields over \(X,\!\) to considering the corresponding differential fields over \(X,\!\) analogous to what are usually called vector fields over \(X.\!\)

The structure of these differential fields can be described this way. With each point of \(X\!\) there is associated an object of the following type: a proposition about changes in \(X,\!\) that is, a proposition \(g : \mathrm{d}X \to \mathbb{B}.\!\) In this frame of reference, if \({X^\circ}\!\) is the universe that is generated by the set of coordinate propositions \(\{ p, q \},\!\) then \(\mathrm{d}X^\circ\!\) is the differential universe that is generated by the set of differential propositions \(\{ \mathrm{d}p, \mathrm{d}q \}.\!\) These differential propositions may be interpreted as indicating \({}^{\backprime\backprime} \text{change in}\, p \, {}^{\prime\prime}\!\) and \({}^{\backprime\backprime} \text{change in}\, q \, {}^{\prime\prime},\!\) respectively.

A differential operator \(\mathrm{W},\!\) of the first order class that we have been considering, takes a proposition \(f : X \to \mathbb{B}\!\) and gives back a differential proposition \(\mathrm{W}f : \mathrm{E}X \to \mathbb{B}.\!\) In the field view, we see the proposition \(f : X \to \mathbb{B}\!\) as a scalar field and we see the differential proposition \(\mathrm{W}f : \mathrm{E}X \to \mathbb{B}\!\) as a vector field, specifically, a field of propositions about contemplated changes in \(X.\!\)

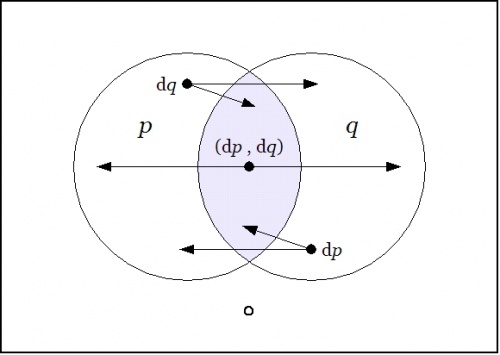

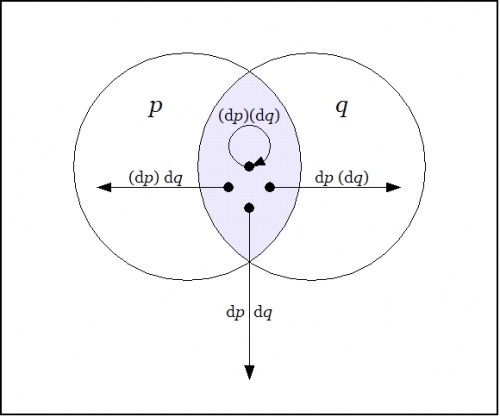

The field of changes produced by \(\mathrm{E}\!\) on \(pq\!\) is shown in the next venn diagram:

|

| \(\text{Enlargement}~ \mathrm{E}(pq) : \mathrm{E}X \to \mathbb{B}\!\)

|

|

\(\begin{array}{rcccccc}

\mathrm{E}(pq)

& = &

p

& \cdot &

q

& \cdot &

\texttt{(} \mathrm{d}p \texttt{)}

\texttt{(} \mathrm{d}q \texttt{)}

\\[4pt]

& + &

p

& \cdot &

\texttt{(} q \texttt{)}

& \cdot &

\texttt{(} \mathrm{d}p \texttt{)}

\texttt{~} \mathrm{d}q \texttt{~}

\\[4pt]

& + &

\texttt{(} p \texttt{)}

& \cdot &

q

& \cdot &

\texttt{~} \mathrm{d}p \texttt{~}

\texttt{(} \mathrm{d}q \texttt{)}

\\[4pt]

& + &

\texttt{(} p \texttt{)}

& \cdot &

\texttt{(} q \texttt{)}

& \cdot &

\texttt{~} \mathrm{d}p \texttt{~}

\texttt{~} \mathrm{d}q \texttt{~}

\end{array}\!\)

|

The differential field \(\mathrm{E}(pq)\!\) specifies the changes that need to be made from each point of \(X\!\) in order to reach one of the models of the proposition \(pq,\!\) that is, in order to satisfy the proposition \(pq.\!\)
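A minimal Python sketch may help to make this concrete. It assumes the usual reading of the enlargement operator, \(\mathrm{E}f(p, q, \mathrm{d}p, \mathrm{d}q) = f(p + \mathrm{d}p, q + \mathrm{d}q)\!\) with addition mod 2, and the names `f`, `enlarge`, and `field` are hypothetical labels for this example. For each point of \(X\!\) it lists the changes \((\mathrm{d}p, \mathrm{d}q)\!\) that \(\mathrm{E}(pq)\!\) admits there.

```python
from itertools import product

BITS = (0, 1)

def f(p, q):
    """The conjunction pq as a function into B = {0, 1}."""
    return p & q

def enlarge(f):
    """E f(p, q, dp, dq) = f(p + dp, q + dq), with + taken mod 2 (XOR)."""
    return lambda p, q, dp, dq: f(p ^ dp, q ^ dq)

Ef = enlarge(f)

# For each point (p, q) of X, collect the changes (dp, dq) where E(pq) holds.
field = {
    (p, q): [(dp, dq) for dp, dq in product(BITS, repeat=2) if Ef(p, q, dp, dq)]
    for p, q in product(BITS, repeat=2)
}

for point, changes in field.items():
    print(point, "->", changes)
```

Each point has exactly one admissible change, namely the one that lands on the cell where \(p\!\) and \(q\!\) are both true, in agreement with the four terms of the expansion above.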

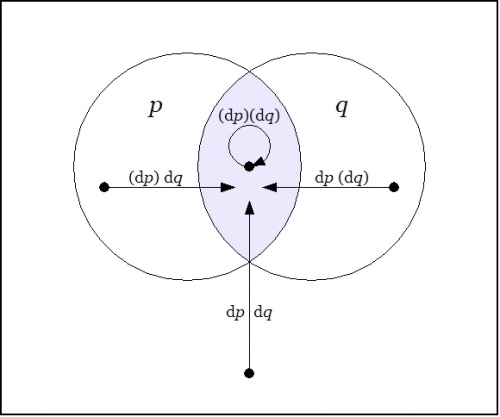

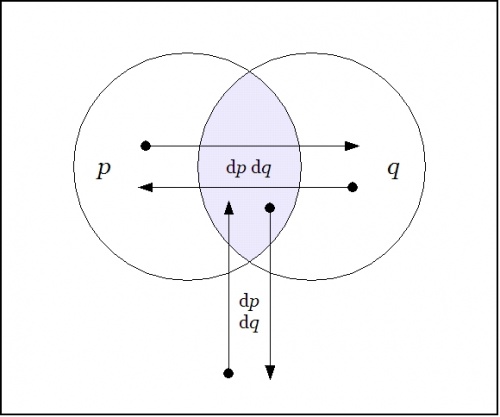

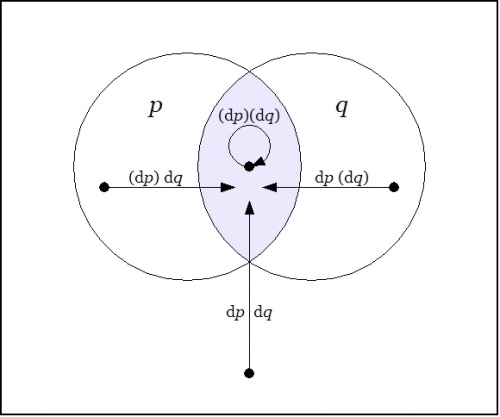

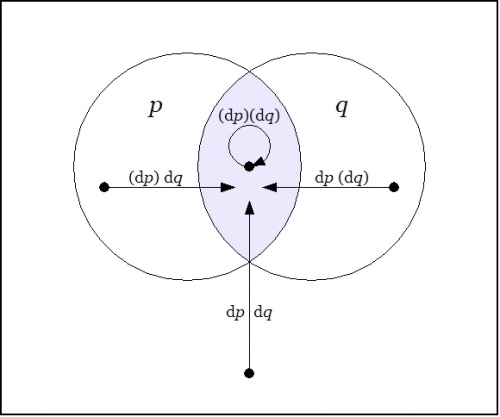

The field of changes produced by \(\mathrm{D}\!\) on \(pq\!\) is shown in the following venn diagram:

|

| \(\text{Difference}~ \mathrm{D}(pq) : \mathrm{E}X \to \mathbb{B}~\!\)

|

|

\(\begin{array}{rcccccc}

\mathrm{D}(pq)

& = &

p

& \cdot &

q

& \cdot &

\texttt{(}

\texttt{(} \mathrm{d}p \texttt{)}

\texttt{(} \mathrm{d}q \texttt{)}

\texttt{)}

\\[4pt]

& + &

p

& \cdot &

\texttt{(} q \texttt{)}

& \cdot &

\texttt{~}

\texttt{(} \mathrm{d}p \texttt{)}

\texttt{~} \mathrm{d}q \texttt{~}

\texttt{~}

\\[4pt]

& + &

\texttt{(} p \texttt{)}

& \cdot &

q

& \cdot &

\texttt{~}

\texttt{~} \mathrm{d}p \texttt{~}

\texttt{(} \mathrm{d}q \texttt{)}

\texttt{~}

\\[4pt]

& + &

\texttt{(} p \texttt{)}

& \cdot &

\texttt{(}q \texttt{)}

& \cdot &

\texttt{~}

\texttt{~} \mathrm{d}p \texttt{~}

\texttt{~} \mathrm{d}q \texttt{~}

\texttt{~}

\end{array}\!\)

|

The differential field \(\mathrm{D}(pq)\!\) specifies the changes that need to be made from each point of \(X\!\) in order to feel a change in the felt value of the field \(pq.\!\)
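The same kind of sketch works for the difference operator. It assumes the relation \(\mathrm{D}f = \mathrm{E}f + f\!\) with addition mod 2, which this article states for \(pq\!\) further on in terms of the tacit extension. The names `difference`, `field`, and `arrows` are hypothetical.

```python
from itertools import product

BITS = (0, 1)

def f(p, q):
    """The conjunction pq as a function into B = {0, 1}."""
    return p & q

def difference(f):
    """D f = E f + f (mod 2): 1 exactly where the step (dp, dq) changes f's value."""
    return lambda p, q, dp, dq: f(p ^ dp, q ^ dq) ^ f(p, q)

Df = difference(f)

# For each point (p, q) of X, collect the changes (dp, dq) where D(pq) holds.
field = {
    (p, q): [(dp, dq) for dp, dq in product(BITS, repeat=2) if Df(p, q, dp, dq)]
    for p, q in product(BITS, repeat=2)
}
arrows = sum(len(changes) for changes in field.values())

for point, changes in field.items():
    print(point, "->", changes)
print("arrows:", arrows)
```

At the point where \(pq\!\) is true, any change other than the null change alters the value, which is the term \(\texttt{((} \mathrm{d}p \texttt{)(} \mathrm{d}q \texttt{))}\!\) in the first row of the expansion.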

Proposition and Tacit Extension

Now that we've introduced the field picture as an aid to thinking about propositions and their analytic series, we can draw a very pleasing picture of the relationships among a proposition \(f : X \to \mathbb{B},\!\) its enlargement or shift map \(\mathrm{E}f : \mathrm{E}X \to \mathbb{B},\!\) and its difference map \(\mathrm{D}f : \mathrm{E}X \to \mathbb{B}.\!\)

To illustrate this possibility, let's return to the differential analysis of the conjunctive proposition \(f(p, q) = pq,\!\) giving the development a slightly different twist at the appropriate point.

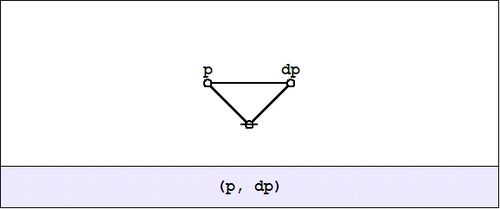

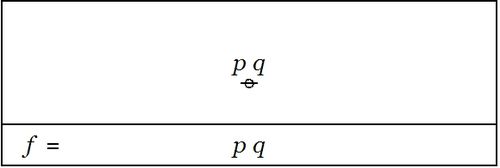

The next venn diagram shows once again the proposition \(pq,\!\) which we now view as a scalar field — analogous to a potential hill in physics, but in logic tantamount to a potential plateau — where the shaded region indicates an elevation of 1 and the unshaded region indicates an elevation of 0.

|

| \(\text{Proposition}~ pq : X \to \mathbb{B}\!\)

|

Given a proposition \(f : X \to \mathbb{B},\!\) the tacit extension of \(f\!\) to \(\mathrm{E}X\!\) is denoted \(\boldsymbol\varepsilon f : \mathrm{E}X \to \mathbb{B}~\!\) and defined by the equation \(\boldsymbol\varepsilon f = f,\!\) so it's really just the same proposition residing in a bigger universe. Tacit extensions formalize the intuitive idea that a function on a particular set of variables can be extended to a function on a superset of those variables in such a way that the new function obeys the same constraints on the old variables, with a "don't care" condition on the new variables.

The tacit extension of the scalar field \(pq : X \to \mathbb{B}\!\) to the differential field \(\boldsymbol\varepsilon (pq) : \mathrm{E}X \to \mathbb{B}\!\) is shown in the following venn diagram:

|

| \(\text{Tacit Extension}~ \boldsymbol\varepsilon (pq) : \mathrm{E}X \to \mathbb{B}~\!\)

|

|

\(\begin{array}{rcccccc}

\boldsymbol\varepsilon (pq)

& = &

p & \cdot & q & \cdot &

\texttt{(} \mathrm{d}p \texttt{)}

\texttt{(} \mathrm{d}q \texttt{)}

\\[4pt]

& + &

p & \cdot & q & \cdot &

\texttt{(} \mathrm{d}p \texttt{)}

\texttt{~} \mathrm{d}q \texttt{~}

\\[4pt]

& + &

p & \cdot & q & \cdot &

\texttt{~} \mathrm{d}p \texttt{~}

\texttt{(} \mathrm{d}q \texttt{)}

\\[4pt]

& + &

p & \cdot & q & \cdot &

\texttt{~} \mathrm{d}p \texttt{~}

\texttt{~} \mathrm{d}q \texttt{~}

\end{array}\!\)

|

Enlargement and Difference Maps

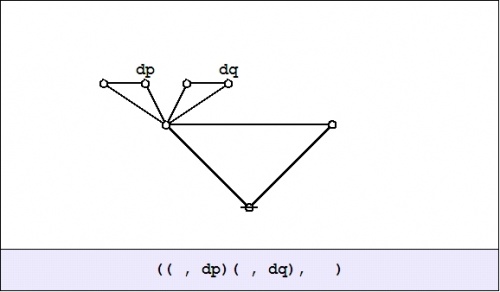

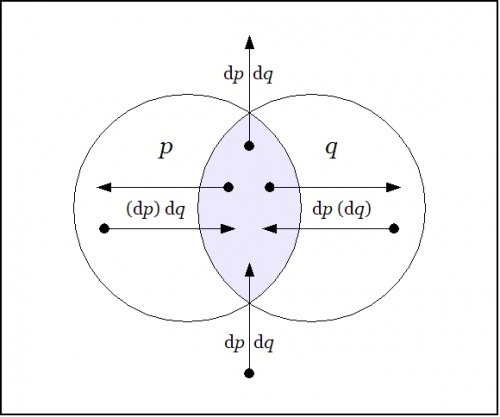

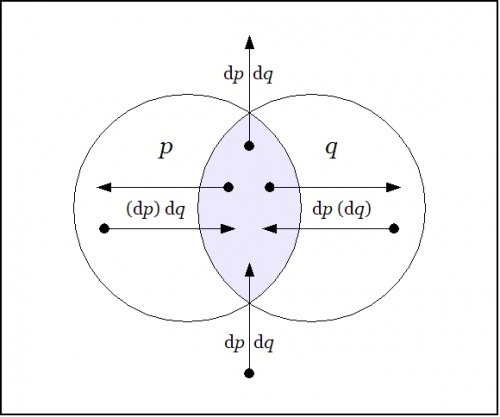

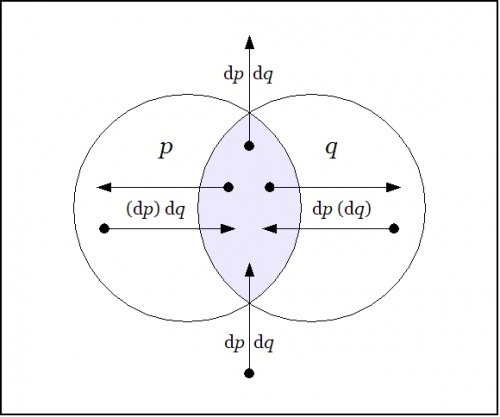

Continuing with the example \(pq : X \to \mathbb{B},\!\) the next venn diagram shows the enlargement or shift map \(\mathrm{E}(pq) : \mathrm{E}X \to \mathbb{B}\!\) in the same style of differential field picture that we drew for the tacit extension \(\boldsymbol\varepsilon (pq) : \mathrm{E}X \to \mathbb{B}.\!\)

|

| \(\text{Enlargement Map}~ \mathrm{E}(pq) : \mathrm{E}X \to \mathbb{B}\!\)

|

|

\(\begin{array}{rcccccc}

\mathrm{E}(pq)

& = &

p

& \cdot &

q

& \cdot &

\texttt{(} \mathrm{d}p \texttt{)}

\texttt{(} \mathrm{d}q \texttt{)}

\\[4pt]

& + &

p

& \cdot &

\texttt{(} q \texttt{)}

& \cdot &

\texttt{(} \mathrm{d}p \texttt{)}

\texttt{~} \mathrm{d}q \texttt{~}

\\[4pt]

& + &

\texttt{(} p \texttt{)}

& \cdot &

q

& \cdot &

\texttt{~} \mathrm{d}p \texttt{~}

\texttt{(} \mathrm{d}q \texttt{)}

\\[4pt]

& + &

\texttt{(} p \texttt{)}

& \cdot &

\texttt{(} q \texttt{)}

& \cdot &

\texttt{~} \mathrm{d}p \texttt{~}

\texttt{~} \mathrm{d}q \texttt{~}

\end{array}\!\)

|

A very important conceptual transition has just occurred here, almost tacitly, as it were. Generally speaking, having a set of mathematical objects of compatible types, in this case the two differential fields \(\boldsymbol\varepsilon f\!\) and \(\mathrm{E}f,\!\) both of the type \(\mathrm{E}X \to \mathbb{B},\!\) is very useful, because it allows us to consider these fields as integral mathematical objects that can be operated on and combined in the ways that we usually associate with algebras.

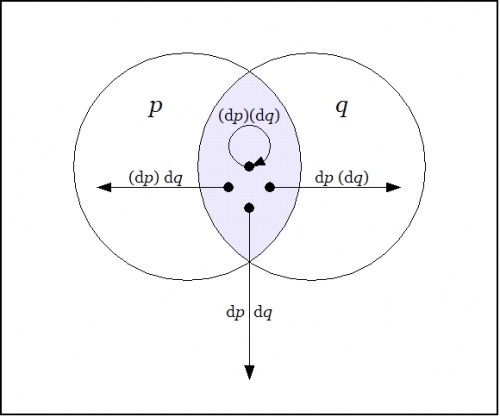

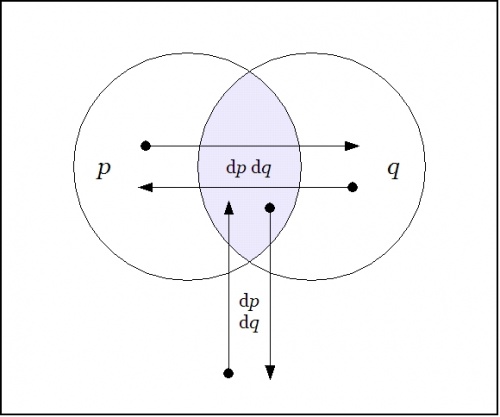

In this case one notices that the tacit extension \(\boldsymbol\varepsilon f\!\) and the enlargement \(\mathrm{E}f\!\) are in a certain sense dual to each other. The tacit extension \(\boldsymbol\varepsilon f\!\) indicates all the arrows out of the region where \(f\!\) is true and the enlargement \(\mathrm{E}f\!\) indicates all the arrows into the region where \(f\!\) is true. The only arc they have in common is the no-change loop \(\texttt{(} \mathrm{d}p \texttt{)(} \mathrm{d}q \texttt{)}\!\) at \(pq.\!\) If we add the two sets of arcs in mod 2 fashion then the loop of multiplicity 2 zeroes out, leaving the 6 arrows of \(\mathrm{D}(pq) = \boldsymbol\varepsilon(pq) + \mathrm{E}(pq)\!\) that are illustrated below:

|

| \(\text{Difference Map}~ \mathrm{D}(pq) : \mathrm{E}X \to \mathbb{B}\!\)

|

|

\(\begin{array}{rcccccc}
\mathrm{D}(pq)
& = & p & \cdot & q & \cdot &
\texttt{(}
\texttt{(} \mathrm{d}p \texttt{)}
\texttt{(} \mathrm{d}q \texttt{)}
\texttt{)}
\\[4pt]
& + & p & \cdot & \texttt{(} q \texttt{)} & \cdot &
\texttt{~}
\texttt{(} \mathrm{d}p \texttt{)}
\texttt{~} \mathrm{d}q \texttt{~}
\texttt{~}
\\[4pt]
& + & \texttt{(} p \texttt{)} & \cdot & q & \cdot &
\texttt{~}
\texttt{~} \mathrm{d}p \texttt{~}
\texttt{(} \mathrm{d}q \texttt{)}
\texttt{~}
\\[4pt]
& + & \texttt{(} p \texttt{)} & \cdot & \texttt{(} q \texttt{)} & \cdot &
\texttt{~}
\texttt{~} \mathrm{d}p \texttt{~}
\texttt{~} \mathrm{d}q \texttt{~}
\texttt{~}
\end{array}\!\)

|
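The mod 2 sum of arcs described above can be sketched in a few lines of Python. This is only an illustration under the assumption that each arc is coded as a point \((p, q, \mathrm{d}p, \mathrm{d}q)\!\) of \(\mathrm{E}X\!\) with 0 and 1 for truth values. The names `eps_arcs`, `enl_arcs`, `diff_arcs`, and `shared` are hypothetical.

```python
from itertools import product

BITS = (0, 1)

def f(p, q):
    """The proposition pq."""
    return p & q

def tacit_extension(p, q, dp, dq):
    """eps(pq): the value of f at the current cell, ignoring the changes."""
    return f(p, q)

def enlargement(p, q, dp, dq):
    """E(pq): the value of f at the cell reached by the changes."""
    return f(p ^ dp, q ^ dq)

def difference(p, q, dp, dq):
    """D(pq) = eps(pq) + E(pq), with + read as exclusive-or."""
    return tacit_extension(p, q, dp, dq) ^ enlargement(p, q, dp, dq)

points = list(product(BITS, repeat=4))
eps_arcs = {x for x in points if tacit_extension(*x)}
enl_arcs = {x for x in points if enlargement(*x)}
diff_arcs = {x for x in points if difference(*x)}

shared = eps_arcs & enl_arcs   # arcs counted twice, which cancel mod 2
print(len(eps_arcs), len(enl_arcs), sorted(shared), len(diff_arcs))
```

The set `diff_arcs` is the symmetric difference of the other two sets: the shared no-change loop at \(pq\!\) drops out and 6 arcs remain.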

Tangent and Remainder Maps

If we follow the classical line that singles out linear functions as ideals of simplicity, then we may complete the analytic series of the proposition \(f = pq : X \to \mathbb{B}\!\) in the following way.

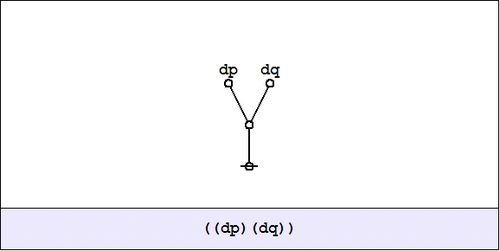

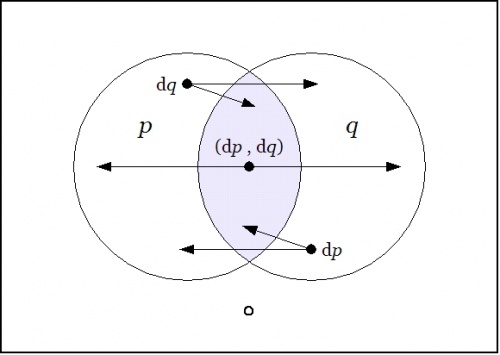

The next venn diagram shows the differential proposition \(\mathrm{d}f = \mathrm{d}(pq) : \mathrm{E}X \to \mathbb{B}\!\) that we get by extracting the cell-wise linear approximation to the difference map \(\mathrm{D}f = \mathrm{D}(pq) : \mathrm{E}X \to \mathbb{B}.\!\) This is the logical analogue of what would ordinarily be called the differential of \(pq,\!\) but since I've been attaching the adjective differential to just about everything in sight, the distinction tends to be lost. For the time being, I'll resort to using the alternative name tangent map for \(\mathrm{d}f.\!\)

|

| \(\text{Tangent Map}~ \mathrm{d}(pq) : \mathrm{E}X \to \mathbb{B}\!\)

|

Just to be clear about what's being indicated here, it's a visual way of summarizing the following data:

|

\(\begin{array}{rcccccc}
\mathrm{d}(pq)
& = & p & \cdot & q & \cdot &
\texttt{(} \mathrm{d}p \texttt{,} \mathrm{d}q \texttt{)}
\\[4pt]
& + & p & \cdot & \texttt{(} q \texttt{)} & \cdot &
\mathrm{d}q
\\[4pt]
& + & \texttt{(} p \texttt{)} & \cdot & q & \cdot &
\mathrm{d}p
\\[4pt]
& + & \texttt{(} p \texttt{)} & \cdot & \texttt{(} q \texttt{)} & \cdot & 0
\end{array}\!\)

|

To understand the extended interpretations, that is, the conjunctions of basic and differential features that are being indicated here, it may help to note the following equivalences:

|

\(\begin{matrix}
\texttt{(} \mathrm{d}p \texttt{,} \mathrm{d}q \texttt{)}
& = &
\texttt{~} \mathrm{d}p \texttt{~}
\texttt{(} \mathrm{d}q \texttt{)}
& + &
\texttt{(} \mathrm{d}p \texttt{)}
\texttt{~} \mathrm{d}q \texttt{~}
\\[4pt]
\mathrm{d}p
& = &
\texttt{~} \mathrm{d}p \texttt{~}
\texttt{~} \mathrm{d}q \texttt{~}
& + &
\texttt{~} \mathrm{d}p \texttt{~}
\texttt{(} \mathrm{d}q \texttt{)}
\\[4pt]
\mathrm{d}q
& = &
\texttt{~} \mathrm{d}p \texttt{~}
\texttt{~} \mathrm{d}q \texttt{~}
& + &
\texttt{(} \mathrm{d}p \texttt{)}
\texttt{~} \mathrm{d}q \texttt{~}
\end{matrix}\!\)

|
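The data for \(\mathrm{d}(pq)\!\) and the three equivalences above can be checked mechanically. The following Python sketch does so under two assumptions that are not spelled out in the text: truth values are coded as 0 and 1, and the tangent map has the closed form \(q \cdot \mathrm{d}p + p \cdot \mathrm{d}q\!\) with \(+\!\) read as exclusive-or. The names `tangent`, `rows`, and `neg` are illustrative.

```python
from itertools import product

BITS = (0, 1)

def tangent(p, q, dp, dq):
    """Assumed closed form of d(pq): q*dp + p*dq, with + as exclusive-or."""
    return (q & dp) ^ (p & dq)

def neg(x):
    """Logical negation, written (x) in cactus syntax."""
    return 1 ^ x

# The four rows of the table for d(pq), keyed by the cell (p, q).
rows = {
    (1, 1): lambda dp, dq: dp ^ dq,   # (dp, dq): just one of dp, dq is true
    (1, 0): lambda dp, dq: dq,
    (0, 1): lambda dp, dq: dp,
    (0, 0): lambda dp, dq: 0,
}

table_agrees = all(
    tangent(p, q, dp, dq) == rows[(p, q)](dp, dq)
    for p, q, dp, dq in product(BITS, repeat=4)
)

# The three equivalences, checked over all values of dp and dq.
equivalences_hold = all(
    (dp ^ dq) == (dp & neg(dq)) ^ (neg(dp) & dq)
    and dp == (dp & dq) ^ (dp & neg(dq))
    and dq == (dp & dq) ^ (neg(dp) & dq)
    for dp, dq in product(BITS, repeat=2)
)

print(table_agrees, equivalences_hold)
```

If the assumed closed form is right, both checks succeed, which is one way of seeing that the table is just \(q \cdot \mathrm{d}p + p \cdot \mathrm{d}q\!\) read off cell by cell.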

Capping the series that analyzes the proposition \(pq\!\) in terms of succeeding orders of linear propositions, the final venn diagram in this series shows the remainder map \(\mathrm{r}(pq) : \mathrm{E}X \to \mathbb{B},\!\) which happens to be linear in pairs of variables.

|

| \(\text{Remainder Map}~ \mathrm{r}(pq) : \mathrm{E}X \to \mathbb{B}\!\)

|

Reading the arrows off the map produces the following data:

|

\(\begin{array}{rcccccc}
\mathrm{r}(pq)
& = & p & \cdot & q & \cdot &
\mathrm{d}p ~ \mathrm{d}q
\\[4pt]
& + & p & \cdot & \texttt{(} q \texttt{)} & \cdot &
\mathrm{d}p ~ \mathrm{d}q
\\[4pt]
& + & \texttt{(} p \texttt{)} & \cdot & q & \cdot &
\mathrm{d}p ~ \mathrm{d}q
\\[4pt]
& + & \texttt{(} p \texttt{)} & \cdot & \texttt{(} q \texttt{)} & \cdot &
\mathrm{d}p ~ \mathrm{d}q
\end{array}\!\)

|

In short, \(\mathrm{r}(pq)\!\) is a constant field, having the value \(\mathrm{d}p~\mathrm{d}q\!\) at each cell.
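A short Python sketch can confirm that the remainder is constant. It assumes that the remainder is what is left of \(\mathrm{D}(pq)\!\) once the tangent map is taken away, that sums are taken mod 2, and that the tangent map has the closed form \(q \cdot \mathrm{d}p + p \cdot \mathrm{d}q.\!\) The function names are illustrative only.

```python
from itertools import product

BITS = (0, 1)

def f(p, q):
    """The proposition pq."""
    return p & q

def difference(p, q, dp, dq):
    """D(pq) = eps(pq) + E(pq), with + read as exclusive-or."""
    return f(p, q) ^ f(p ^ dp, q ^ dq)

def tangent(p, q, dp, dq):
    """Assumed closed form of d(pq): q*dp + p*dq."""
    return (q & dp) ^ (p & dq)

def remainder(p, q, dp, dq):
    """r(pq) = D(pq) + d(pq); mod 2, subtraction and addition coincide."""
    return difference(p, q, dp, dq) ^ tangent(p, q, dp, dq)

# The remainder should equal dp*dq at every cell (p, q).
remainder_is_constant = all(
    remainder(p, q, dp, dq) == (dp & dq)
    for p, q, dp, dq in product(BITS, repeat=4)
)
print(remainder_is_constant)
```

Expanding \((p + \mathrm{d}p)(q + \mathrm{d}q)\!\) mod 2 gives the same result by hand: the terms \(pq\!\) cancel, the linear terms make up the tangent map, and only \(\mathrm{d}p~\mathrm{d}q\!\) is left over.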

Least Action Operators

We have been contemplating functions of the type \(f : X \to \mathbb{B}\!\) and studying the action of the operators \(\mathrm{E}\!\) and \(\mathrm{D}\!\) on this family. These functions, that we may identify for our present aims with propositions, inasmuch as they capture their abstract forms, are logical analogues of scalar potential fields. These are the sorts of fields that are so picturesquely presented in elementary calculus and physics textbooks by images of snow-covered hills and parties of skiers who trek down their slopes like least action heroes. The analogous scene in propositional logic presents us with forms more reminiscent of plateaunic idylls, being all plains at one of two levels, the mesas of verity and falsity, as it were, with nary a niche to inhabit between them, restricting our options for a sporting gradient of downhill dynamics to just one of two: standing still on level ground or falling off a bluff.

We are still working well within the logical analogue of the classical finite difference calculus, taking in the novelties that the logical transmutation of familiar elements is able to bring to light. Soon we will take up several different notions of approximation relationships that may be seen to organize the space of propositions, and these will allow us to define several different forms of differential analysis applying to propositions. In time we will find reason to consider more general types of maps, having concrete types of the form \(X_1 \times \ldots \times X_k \to Y_1 \times \ldots \times Y_n\!\) and abstract types \(\mathbb{B}^k \to \mathbb{B}^n.\!\) We will think of these mappings as transforming universes of discourse into themselves or into others, in short, as transformations of discourse.

Before we continue with this itinerary, however, I would like to highlight another sort of differential aspect that concerns the boundary operator or the marked connective that serves as one of the two basic connectives in the cactus language for zeroth order logic.

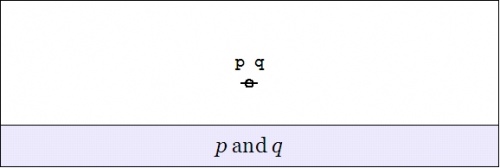

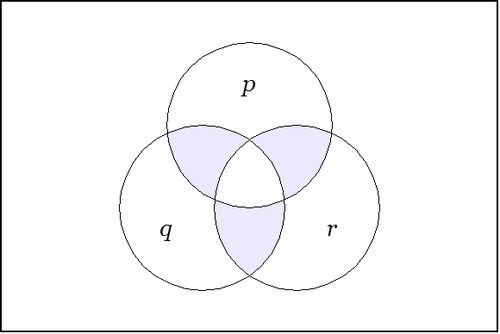

For example, consider the proposition \(f\!\) of concrete type \(f : P \times Q \times R \to \mathbb{B}\!\) and abstract type \(f : \mathbb{B}^3 \to \mathbb{B}\!\) that is written \(\texttt{(} p, q, r \texttt{)}\!\) in cactus syntax. Taken as an assertion in what Peirce called the existential interpretation, the proposition \(\texttt{(} p, q, r \texttt{)}\!\) says that just one of \(p, q, r\!\) is false. It is instructive to consider this assertion in relation to the logical conjunction \(pqr\!\) of the same propositions. A venn diagram of \(\texttt{(} p, q, r \texttt{)}\!\) looks like this:

In relation to the center cell indicated by the conjunction \(pqr,\!\) the region indicated by \(\texttt{(} p, q, r \texttt{)}\!\) is composed of the adjacent or bordering cells. Thus they are the cells that are just across the boundary of the center cell, reached as if by way of Leibniz's minimal changes from the point of origin, in this case, \(pqr.\!\)

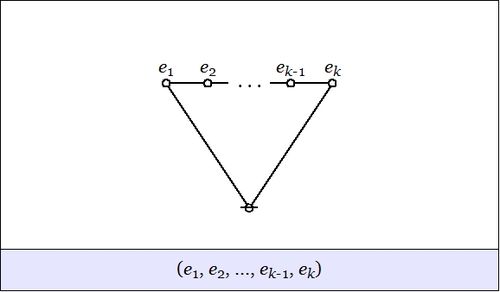

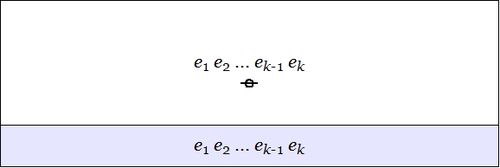

More generally speaking, in a \(k\!\)-dimensional universe of discourse that is based on the alphabet of features \(\mathcal{X} = \{ x_1, \ldots, x_k \},\!\) the same form of boundary relationship is manifested for any cell of origin that one chooses to indicate. One way to indicate a cell is by forming a logical conjunction of positive and negative basis features, that is, by constructing an expression of the form \(e_1 \cdot \ldots \cdot e_k,\!\) where \(e_j = x_j ~\text{or}~ e_j = \texttt{(} x_j \texttt{)},\!\) for \(j = 1 ~\text{to}~ k.\!\) The proposition \(\texttt{(} e_1, \ldots, e_k \texttt{)}\!\) indicates the disjunctive region consisting of the cells that are just next door to \(e_1 \cdot \ldots \cdot e_k.\!\)
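The boundary relationship can be illustrated with a small Python sketch. It assumes that cells are coded as tuples of 0s and 1s and that the form \(\texttt{(} e_1, \ldots, e_k \texttt{)}\!\) is true when just one of its arguments is false. The names `lobe`, `boundary_region`, and `hamming_neighbors` are hypothetical helpers introduced for this example.

```python
from itertools import product

BITS = (0, 1)

def lobe(*args):
    """Cactus form (e_1, ..., e_k): true when just one argument is false."""
    return int(sum(1 for e in args if not e) == 1)

def boundary_region(origin):
    """Cells x where (e_1, ..., e_k) holds, with e_j true when x_j matches origin."""
    k = len(origin)
    return {
        x for x in product(BITS, repeat=k)
        if lobe(*(int(xj == cj) for xj, cj in zip(x, origin)))
    }

def hamming_neighbors(origin):
    """Cells that differ from origin in exactly one coordinate."""
    return {
        tuple(b ^ 1 if j == i else b for j, b in enumerate(origin))
        for i in range(len(origin))
    }

center = (1, 1, 1)  # the cell pqr
print(sorted(boundary_region(center)))
print(all(boundary_region(c) == hamming_neighbors(c)
          for c in product(BITS, repeat=3)))
```

The second line of output compares the two constructions for every choice of origin in a 3-dimensional universe, which is the sense in which the same boundary form is manifested at any cell.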

Goal-Oriented Systems

I want to continue developing the basic tools of differential logic, which arose from exploring the connections between dynamics and logic, but I also want to give some hint of the applications that have motivated this work all along. One of these applications is to cybernetic systems, whether we see these systems as agents or cultures, individuals or species, organisms or organizations.

A cybernetic system has goals and actions for reaching them. It has a state space \(X,\!\) giving us all of the states that the system can be in, plus it has a goal space \(G \subseteq X,\!\) the set of states that the system “likes” to be in, in other words, the distinguished subset of possible states where the system is regarded as living, surviving, or thriving, depending on the type of goal that one has in mind for the system in question. As for actions, there is to begin with the full set \(\mathcal{T}\!\) of all possible actions, each of which is a transformation of the form \(T : X \to X,\!\) but a given cybernetic system will most likely have but a subset of these actions available to it at any given time. And even if we begin by thinking of actions in very general and very global terms, as arbitrarily complex transformations acting on the whole state space \(X,\!\) we quickly find a need to analyze and approximate them in terms of simple transformations acting locally. The preferred measure of “simplicity” will of course vary from one paradigm of research to another.

A generic enough picture at this stage of the game, and one that will remind us of these fundamental features of the cybernetic system even as things get far more complex, is afforded by Figure 23.

    o---------------------------------------------------------------------o
    |                                                                     |
    |   X                                                                 |
    |                        o-------------------o                        |
    |                       /                     \                       |
    |                      /                       \                      |
    |                     /                         \                     |
    |                    /                           \                    |
    |                   /                             \                   |
    |                  /                               \                  |
    |                 /                                 \                 |
    |                o                 G                 o                |
    |                |                                   |                |
    |                |                                   |                |
    |                |                                   |                |
    |                |                 o<---------T---------o             |
    |                |                                   |                |
    |                |                                   |                |
    |                |                                   |                |
    |                o                                   o                |
    |                 \                                 /                 |
    |                  \                               /                  |
    |                   \                             /                   |
    |                    \                           /                    |
    |                     \                         /                     |
    |                      \                       /                      |
    |                       \                     /                       |
    |                        o-------------------o                        |
    |                                                                     |
    |                                                                     |
    o---------------------------------------------------------------------o

Figure 23. Elements of a Cybernetic System

|
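To make the ingredients of Figure 23 concrete, here is a toy sketch in Python. Everything in it is an assumption made for illustration: the state space is taken to be the four cells of a 2-dimensional universe, the goal space is the single cell \(pq,\!\) the available actions are single-bit flips standing in for "simple transformations acting locally", and the greedy rule in `step` is just one possible way of choosing among them.

```python
from itertools import product

X = set(product((0, 1), repeat=2))   # state space (hypothetical example)
G = {(1, 1)}                         # goal space, a subset of X

def flip(i):
    """A simple local action T : X -> X that toggles coordinate i."""
    def T(x):
        return tuple(b ^ 1 if j == i else b for j, b in enumerate(x))
    return T

available = [flip(0), flip(1)]       # the actions open to this system

def distance_to_goal(x):
    """Least number of coordinate changes needed to reach some state in G."""
    return min(sum(a != b for a, b in zip(x, g)) for g in G)

def step(x):
    """Stay put inside G; otherwise take the action that ends nearest to G."""
    if x in G:
        return x
    return min((T(x) for T in available), key=distance_to_goal)

def run(x, max_steps=10):
    """Iterate step from x, recording the states visited."""
    path = [x]
    while x not in G and len(path) <= max_steps:
        x = step(x)
        path.append(x)
    return path

print(run((0, 0)))
```

A different measure of simplicity would lead to a different set `available`, and a different control rule would replace `step`, but the roles of \(X,\!\) \(G,\!\) and \(T\!\) stay the same.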

Further Reading

A more detailed presentation of Differential Logic can be found here:

Document History

Differential Logic • Ontology List 2002

- http://web.archive.org/web/20140406040004/http://suo.ieee.org/ontology/msg04040.html

- http://web.archive.org/web/20110612001949/http://suo.ieee.org/ontology/msg04041.html

- http://web.archive.org/web/20110612010502/http://suo.ieee.org/ontology/msg04045.html

- http://web.archive.org/web/20110612005212/http://suo.ieee.org/ontology/msg04046.html

- http://web.archive.org/web/20110612001954/http://suo.ieee.org/ontology/msg04047.html

- http://web.archive.org/web/20110612010620/http://suo.ieee.org/ontology/msg04048.html

- http://web.archive.org/web/20110612010550/http://suo.ieee.org/ontology/msg04052.html

- http://web.archive.org/web/20110612010724/http://suo.ieee.org/ontology/msg04054.html

- http://web.archive.org/web/20110612000847/http://suo.ieee.org/ontology/msg04055.html

- http://web.archive.org/web/20110612001959/http://suo.ieee.org/ontology/msg04067.html

- http://web.archive.org/web/20110612010507/http://suo.ieee.org/ontology/msg04068.html

- http://web.archive.org/web/20110612002014/http://suo.ieee.org/ontology/msg04069.html

- http://web.archive.org/web/20110612010701/http://suo.ieee.org/ontology/msg04070.html

- http://web.archive.org/web/20110612003540/http://suo.ieee.org/ontology/msg04072.html

- http://web.archive.org/web/20110612005229/http://suo.ieee.org/ontology/msg04073.html

- http://web.archive.org/web/20110610153117/http://suo.ieee.org/ontology/msg04074.html

- http://web.archive.org/web/20110612010555/http://suo.ieee.org/ontology/msg04077.html

- http://web.archive.org/web/20110612001918/http://suo.ieee.org/ontology/msg04079.html

- http://web.archive.org/web/20110612005244/http://suo.ieee.org/ontology/msg04080.html

- http://web.archive.org/web/20110612005249/http://suo.ieee.org/ontology/msg04268.html

- http://web.archive.org/web/20110612010626/http://suo.ieee.org/ontology/msg04269.html

- http://web.archive.org/web/20110612000853/http://suo.ieee.org/ontology/msg04272.html

- http://web.archive.org/web/20110612010514/http://suo.ieee.org/ontology/msg04273.html

- http://web.archive.org/web/20110612002235/http://suo.ieee.org/ontology/msg04290.html

Dynamics And Logic • Inquiry List 2004

- http://stderr.org/pipermail/inquiry/2004-May/001400.html

- http://stderr.org/pipermail/inquiry/2004-May/001401.html

- http://stderr.org/pipermail/inquiry/2004-May/001402.html

- http://stderr.org/pipermail/inquiry/2004-May/001403.html

- http://stderr.org/pipermail/inquiry/2004-May/001404.html

- http://stderr.org/pipermail/inquiry/2004-May/001405.html

- http://stderr.org/pipermail/inquiry/2004-May/001406.html

- http://stderr.org/pipermail/inquiry/2004-May/001407.html

- http://stderr.org/pipermail/inquiry/2004-May/001408.html

- http://stderr.org/pipermail/inquiry/2004-May/001410.html

- http://stderr.org/pipermail/inquiry/2004-May/001411.html

- http://stderr.org/pipermail/inquiry/2004-May/001412.html

- http://stderr.org/pipermail/inquiry/2004-May/001413.html

- http://stderr.org/pipermail/inquiry/2004-May/001415.html

- http://stderr.org/pipermail/inquiry/2004-May/001416.html

- http://stderr.org/pipermail/inquiry/2004-May/001418.html

- http://stderr.org/pipermail/inquiry/2004-May/001419.html

- http://stderr.org/pipermail/inquiry/2004-May/001420.html

- http://stderr.org/pipermail/inquiry/2004-May/001421.html

- http://stderr.org/pipermail/inquiry/2004-May/001422.html

- http://stderr.org/pipermail/inquiry/2004-May/001423.html

- http://stderr.org/pipermail/inquiry/2004-May/001424.html

- http://stderr.org/pipermail/inquiry/2004-July/001685.html

- http://stderr.org/pipermail/inquiry/2004-July/001686.html

- http://stderr.org/pipermail/inquiry/2004-July/001687.html

- http://stderr.org/pipermail/inquiry/2004-July/001688.html

Dynamics And Logic • NKS Forum 2004

- http://forum.wolframscience.com/showthread.php?postid=1282#post1282

- http://forum.wolframscience.com/showthread.php?postid=1285#post1285

- http://forum.wolframscience.com/showthread.php?postid=1289#post1289

- http://forum.wolframscience.com/showthread.php?postid=1292#post1292

- http://forum.wolframscience.com/showthread.php?postid=1293#post1293

- http://forum.wolframscience.com/showthread.php?postid=1294#post1294

- http://forum.wolframscience.com/showthread.php?postid=1296#post1296

- http://forum.wolframscience.com/showthread.php?postid=1299#post1299

- http://forum.wolframscience.com/showthread.php?postid=1301#post1301

- http://forum.wolframscience.com/showthread.php?postid=1304#post1304

- http://forum.wolframscience.com/showthread.php?postid=1307#post1307

- http://forum.wolframscience.com/showthread.php?postid=1309#post1309

- http://forum.wolframscience.com/showthread.php?postid=1311#post1311

- http://forum.wolframscience.com/showthread.php?postid=1314#post1314

- http://forum.wolframscience.com/showthread.php?postid=1315#post1315

- http://forum.wolframscience.com/showthread.php?postid=1318#post1318

- http://forum.wolframscience.com/showthread.php?postid=1321#post1321

- http://forum.wolframscience.com/showthread.php?postid=1323#post1323

- http://forum.wolframscience.com/showthread.php?postid=1326#post1326

- http://forum.wolframscience.com/showthread.php?postid=1327#post1327

- http://forum.wolframscience.com/showthread.php?postid=1330#post1330

- http://forum.wolframscience.com/showthread.php?postid=1331#post1331

- http://forum.wolframscience.com/showthread.php?postid=1598#post1598

- http://forum.wolframscience.com/showthread.php?postid=1601#post1601

- http://forum.wolframscience.com/showthread.php?postid=1602#post1602

- http://forum.wolframscience.com/showthread.php?postid=1603#post1603

|